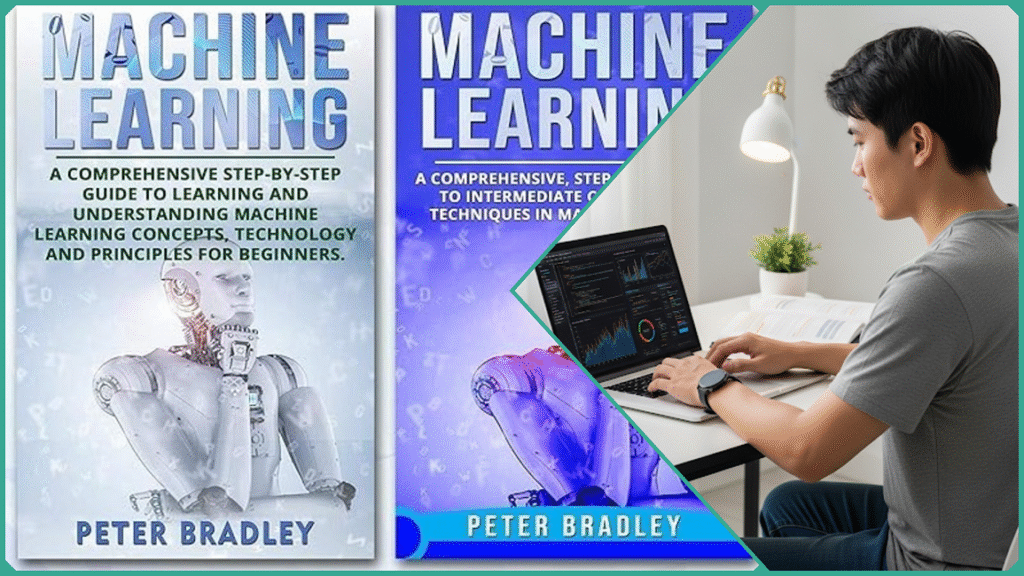

9 Practical Steps of Machine Learning: Beginners to Experts


Machine learning (ML) has moved from a niche academic topic to an integral part of daily workflows at startups, major tech companies, and established traditional industries alike.

As demand for machine learning skills keeps growing, many people want to break into the field, strengthen their existing abilities, or improve how they run ML projects.

Whether you are starting your first machine learning project or exploring advanced techniques to scale your work, following the right practical steps is essential to working effectively in this fast-moving field.

This guide demystifies machine learning for novices while offering fresh strategies for seasoned practitioners. Drawing on industry sources and firsthand experience, we break down the machine learning workflow, from initial concept to deployment, and cover fundamental theory, actionable code suggestions, and expert advice on current trends.

Why Machine Learning Practical Steps Matter

Practical steps matter in machine learning because they help professionals at every level, from beginners to experts, navigate the common challenges of learning and applying ML techniques.

These steps form a structured framework that deepens understanding, sharpens problem-solving skills, and makes real-world ML implementations more reliable and accurate.

For Beginners

For beginners, the sheer volume of theory and the apparent complexity of the field can feel overwhelming, leading to confusion and a lack of direction. Step-by-step guidance demystifies machine learning and builds confidence by breaking complex tasks into small, manageable actions.

This lets beginners learn by doing through hands-on work, rather than absorbing abstract concepts with no practical application.

For Intermediate Learners

Intermediate learners often struggle to choose the right model for a task, tune hyperparameters effectively, manage messy real-world data, and evaluate models reliably.

Clear, practical guidelines help them work through these challenges systematically. This improves model performance and prepares them for the unexpected obstacles of real projects. It also bridges the gap between theoretical knowledge and practical application.

For Experts

Experts focus on refining deployment strategies, adopting new tools, automating workflows through MLOps, and integrating new paradigms such as AutoML and generative AI.

Their practical approaches keep them efficient, productive, and innovative. They validate their methods against industry best practices and stay current with emerging advances in the field.

More broadly, practical steps turn passive understanding into real proficiency. They accelerate learning through hands-on experience, encourage creativity through experimentation, and make workflows more efficient. The result is better decision-making, lower costs, and higher-impact outcomes in industries from healthcare and finance to retail and beyond.

Specifically, this practical approach addresses several key needs, including:

- Enhancing operational efficiency by automating repetitive tasks. This reduces errors and frees team members for more strategic, value-added work.

- Improving predictive accuracy by selecting algorithms suited to the task and carefully tuning their parameters, experimenting with alternatives to find the best-performing option.

- Facilitating continuous improvement by retraining models on new data so they adapt to changing patterns and stay accurate over time.

- Enabling scalable, robust deployments backed by comprehensive monitoring, so performance stays consistent over the long term.

- Supporting explainability and trustworthiness, which is essential in high-stakes applications where decisions have serious consequences. Transparent, reliable systems build confidence among users and stakeholders and encourage responsible use.

In essence, practical steps make machine learning more accessible, actionable, and sustainable. They equip learners and professionals to turn complex data into meaningful real-world solutions.

Without such guidance, many people get overwhelmed or stall under the complexity of raw data analysis and theory. Clear, practical steps bridge the gap between theoretical knowledge and successful application.

Key Concepts and Theories of the Machine Learning Workflow

At any skill level, a typical machine learning (ML) project follows a structured workflow of interdependent steps. These steps carry it from an initial idea to a deployed, reliable, well-performing model.

Below is an explanation of each step in the workflow, aligned with widely accepted industry practice:

Understand the Business Problem

Every successful machine learning (ML) project begins with a clear understanding of the problem you are solving, whether in a business or a research context. This step involves:

- Defining the problem statement precisely and in actionable terms (e.g., “Predict customer churn,” or “Automate invoice classification”).

- Choosing success metrics upfront so progress can be measured, such as accuracy, precision, recall, F1-score, or business KPIs that matter to stakeholders. This alignment ensures the model’s outputs have real-world impact and relevance.

Data Collection & Preparation

The quality and relevance of your data directly determine model performance; as the saying goes, “garbage in, garbage out.” This phase includes:

- Data Collection: Gather data from trusted, relevant, and current sources, such as databases, APIs, web scraping, or existing datasets.

- Preparation: Clean the data by handling missing or duplicated entries, encode categorical variables in numeric form, and visualize the data to spot outliers or anomalies.

- Splitting Data: A common split is roughly 80% training and 20% testing. Larger projects and hyperparameter tuning often add a separate validation set.

- For example, in a medical diagnosis application, you would collect anonymized patient data, clean missing lab results, and perform exploratory visualization to understand diagnosis distributions.
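The cleaning and splitting steps above can be sketched with pandas and scikit-learn. This is a minimal illustration on a tiny hypothetical dataset. The columns `age`, `lab_result`, `sex`, and `diagnosis` are invented for the example and are not real patient data. Missing values are imputed with medians computed on the training split only, so no information from the test set leaks into preprocessing.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

# Hypothetical toy dataset; column names and values are illustrative only.
df = pd.DataFrame({
    "age":        [34, 51, np.nan, 29, 51, 42, 38, 60, 45, 33],
    "lab_result": [5.1, 6.3, 4.8, np.nan, 6.3, 5.5, 5.0, 7.1, 6.0, 4.9],
    "sex":        ["F", "M", "F", "M", "M", "F", "F", "M", "M", "F"],
    "diagnosis":  [0, 1, 0, 0, 1, 1, 0, 1, 1, 0],
})

# 1. Remove exact duplicate rows (rows 1 and 4 are identical).
df = df.drop_duplicates().reset_index(drop=True)

# 2. Encode the categorical column as a numeric indicator column ("sex_M").
df = pd.get_dummies(df, columns=["sex"], drop_first=True, dtype=int)

X = df.drop(columns="diagnosis")
y = df["diagnosis"]

# 3. Roughly 80/20 split, stratified so both subsets keep the class ratio.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

# 4. Fill missing values with medians learned from the training set only.
medians = X_train.median()
X_train = X_train.fillna(medians)
X_test = X_test.fillna(medians)

print(X_train.shape, X_test.shape)
```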

Select the Model Type

Choose the learning paradigm based on the problem at hand and the nature of the data:

- Supervised Learning: Labeled data is used to predict outcomes (e.g., spam detection, house pricing).

- Unsupervised Learning: Identify inherent structure or patterns without labeled outcomes (e.g., clustering customer segments).

- Reinforcement Learning: Models learn optimal strategies via reward-based trial and error, useful for robotics or recommendation systems.
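The sketch below makes the supervised/unsupervised distinction concrete on synthetic 2-D data. The cluster centers are arbitrary values chosen for illustration. The classifier is trained with the labels, while k-means never sees them and has to find the groups on its own. Reinforcement learning needs an environment and a reward loop, so it is not shown here.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import adjusted_rand_score

# Synthetic 2-D data: three well-separated groups (centers chosen for illustration).
X, y = make_blobs(n_samples=300, centers=[[-5, -5], [0, 5], [5, -5]],
                  cluster_std=1.0, random_state=0)

# Supervised: the model learns from the features X AND the known labels y.
clf = LogisticRegression(max_iter=1000).fit(X, y)
supervised_accuracy = clf.score(X, y)  # accuracy on the training data itself

# Unsupervised: the model sees only X and discovers the groups on its own.
km = KMeans(n_clusters=3, n_init=10, random_state=0).fit(X)
# Cluster IDs are arbitrary, so compare groupings with a permutation-invariant score.
agreement = adjusted_rand_score(y, km.labels_)

print(f"supervised training accuracy: {supervised_accuracy:.3f}")
print(f"cluster/label agreement (ARI): {agreement:.3f}")
```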

Build a Baseline Model

Especially if you are new to data science and machine learning, start with simple, easy-to-understand models such as linear regression, logistic regression, or decision trees. These foundational models:

- Provide interpretable results.

- Set a baseline performance level to compare more complex models against.

- Help identify data or modeling issues early, before moving to advanced techniques.
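A baseline can be as simple as the sketch below. It uses scikit-learn's bundled breast cancer dataset purely as a stand-in for your own data. A majority-class `DummyClassifier` sets the floor any real model must beat, and a scaled logistic regression serves as the interpretable first model.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.dummy import DummyClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42)

# Trivial reference point: always predict the most frequent class.
dummy = DummyClassifier(strategy="most_frequent").fit(X_train, y_train)

# Simple, interpretable baseline: standardized features + logistic regression.
baseline = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
baseline.fit(X_train, y_train)

dummy_acc = dummy.score(X_test, y_test)
baseline_acc = baseline.score(X_test, y_test)
print(f"majority-class accuracy:      {dummy_acc:.3f}")
print(f"logistic regression accuracy: {baseline_acc:.3f}")
```

Any later, more complex model should be compared against `baseline_acc`, not only against the dummy score.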

Train & Tune Models

Training feeds prepared, preprocessed data into the model, usually through data pipelines built with libraries such as Scikit-learn, TensorFlow, or PyTorch. Key activities in this phase include:

- Hyperparameter tuning: Adjusting settings such as learning rate or tree depth, using methods like grid search or random search, to optimize performance on a chosen validation metric.

- Ensemble methods: Combining multiple models via bagging, boosting, or stacking to improve predictive performance.
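The sketch below shows grid search in practice. It tunes two decision-tree hyperparameters with scikit-learn's `GridSearchCV`, using the bundled breast cancer dataset as a placeholder. The grid values are illustrative assumptions, not recommended defaults. For larger search spaces, `RandomizedSearchCV` (random search) samples a fixed number of combinations instead of trying them all.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42)

# Candidate values for two decision-tree hyperparameters (illustrative grid).
param_grid = {
    "max_depth": [2, 3, 5, None],
    "min_samples_leaf": [1, 5, 10],
}

# 5-fold cross-validated search on the training set only; the test set is
# held back for a final check of the refitted best model.
search = GridSearchCV(
    DecisionTreeClassifier(random_state=0),
    param_grid,
    cv=5,
    scoring="f1",
)
search.fit(X_train, y_train)

print("best params:", search.best_params_)
print("mean CV F1: ", round(search.best_score_, 3))
print("test F1:    ", round(search.score(X_test, y_test), 3))
```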

A well-tuned simple model often outperforms a complex model that is poorly tuned or trained on low-quality or irrelevant data. Proper tuning and good data matter as much as model choice.
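One way to test this on your own data is to build bagging, boosting, and stacking ensembles and score them against a plain logistic regression on the same split. The sketch below uses scikit-learn's ensemble classes with mostly default settings, so treat the numbers as a starting comparison rather than a verdict. Which model wins depends on the dataset and on tuning. The bundled breast cancer dataset is only a placeholder.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import (BaggingClassifier, GradientBoostingClassifier,
                              StackingClassifier)
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42)

simple = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

models = {
    "logistic (simple)": simple,
    # Bagging: many trees fit on bootstrap samples, predictions aggregated.
    "bagging": BaggingClassifier(DecisionTreeClassifier(), n_estimators=50,
                                 random_state=0),
    # Boosting: trees fit sequentially, each one correcting earlier errors.
    "boosting": GradientBoostingClassifier(random_state=0),
    # Stacking: a meta-model learns how to combine the base models' outputs.
    "stacking": StackingClassifier(
        estimators=[("tree", DecisionTreeClassifier(max_depth=3, random_state=0)),
                    ("logreg", simple)],
        final_estimator=LogisticRegression(max_iter=1000)),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores[name] = model.score(X_test, y_test)
    print(f"{name:18s} accuracy = {scores[name]:.3f}")
```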

Model Evaluation

Evaluate your model with metrics suited to the task you are addressing:

- Classification Metrics: Accuracy, precision, recall, F1-score, ROC-AUC.

- Regression Metrics: Mean Squared Error (MSE), Mean Absolute Error (MAE).

- Use cross-validation to estimate how well the model generalizes to unseen data and to detect overfitting.
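The metrics listed above are available in `sklearn.metrics`. This sketch computes them on small hand-written vectors. The values are hypothetical and chosen so the arithmetic is easy to check by hand: 2 true positives, 1 false positive, 2 false negatives, and 3 true negatives.

```python
from sklearn.metrics import (accuracy_score, f1_score, mean_absolute_error,
                             mean_squared_error, precision_score, recall_score,
                             roc_auc_score)

# Classification: hypothetical true labels, hard predictions, and scores.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 0, 0, 0, 1, 1]
y_prob = [0.9, 0.2, 0.4, 0.45, 0.1, 0.3, 0.7, 0.6]  # predicted P(class 1)

metrics = {
    "accuracy": accuracy_score(y_true, y_pred),    # 5/8 = 0.625
    "precision": precision_score(y_true, y_pred),  # 2/3 ~ 0.667
    "recall": recall_score(y_true, y_pred),        # 2/4 = 0.5
    "f1": f1_score(y_true, y_pred),                # 4/7 ~ 0.571
    "roc_auc": roc_auc_score(y_true, y_prob),      # 14/16 = 0.875
}

# Regression: hypothetical targets and predictions.
y_true_reg = [3.0, 5.0, 2.5, 7.0]
y_pred_reg = [2.5, 5.0, 3.0, 8.0]
mse = mean_squared_error(y_true_reg, y_pred_reg)   # (0.25 + 0 + 0.25 + 1) / 4 = 0.375
mae = mean_absolute_error(y_true_reg, y_pred_reg)  # (0.5 + 0 + 0.5 + 1) / 4 = 0.5

for name, value in metrics.items():
    print(f"{name:9s} {value:.3f}")
print(f"MSE       {mse:.3f}")
print(f"MAE       {mae:.3f}")
```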

For imbalanced datasets, prioritize precision, recall, or the F1-score over accuracy alone. When one class is rare, a model can reach high accuracy simply by always predicting the majority class, so accuracy can hide poor performance on the minority class.

Precision and recall show how well the model identifies the relevant instances. The F1-score, the harmonic mean of precision and recall, combines both into a single balanced measure.
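A worked example shows why accuracy misleads on imbalanced data. Assume a hypothetical test set with 95 negatives and 5 positives. A model that always predicts the majority class scores higher accuracy than one that actually finds most positives. Precision, recall, and F1 expose the difference.

```python
from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score

# Hypothetical test set: 95 negatives followed by 5 positives (5% minority).
y_true = [0] * 95 + [1] * 5

# Model A: always predicts the majority class.
always_negative = [0] * 100

# Model B: flags 10 cases, 4 of them real positives (6 false alarms, 1 miss).
model_b = [0] * 89 + [1] * 6 + [1] * 4 + [0] * 1

def summarize(y_pred):
    """Return the four classification metrics for one set of predictions."""
    return {
        "accuracy": accuracy_score(y_true, y_pred),
        "precision": precision_score(y_true, y_pred, zero_division=0),
        "recall": recall_score(y_true, y_pred, zero_division=0),
        "f1": f1_score(y_true, y_pred, zero_division=0),
    }

results = {"always negative": summarize(always_negative),
           "model B": summarize(model_b)}

for name, s in results.items():
    print(f"{name:16s} accuracy={s['accuracy']:.3f} precision={s['precision']:.3f} "
          f"recall={s['recall']:.3f} F1={s['f1']:.3f}")
# always negative  accuracy=0.950 precision=0.000 recall=0.000 F1=0.000
# model B          accuracy=0.930 precision=0.400 recall=0.800 F1=0.533
```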

Interpretability and Explainability

In high-stakes domains such as healthcare and finance, explainability is essential. It establishes trust with users and helps meet regulatory requirements.

When decisions affect people’s health or finances, stakeholders need clear explanations of how those decisions are made. Techniques to achieve this include:

- Feature importance visualizations

- SHAP (SHapley Additive exPlanations) values

- LIME (Local Interpretable Model-agnostic Explanations) for interpreting individual predictions.
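SHAP and LIME live in separate third-party packages (`shap`, `lime`), so they are not shown here. For feature importance with fewer dependencies, scikit-learn's `permutation_importance` shuffles one feature at a time on held-out data and measures how much the score drops. The dataset and random forest settings below are placeholders.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

data = load_breast_cancer()
X_train, X_test, y_train, y_test = train_test_split(
    data.data, data.target, test_size=0.2, stratify=data.target, random_state=42)

model = RandomForestClassifier(n_estimators=200, random_state=0).fit(X_train, y_train)

# Shuffle each feature on the held-out set and record the mean drop in accuracy.
result = permutation_importance(model, X_test, y_test, n_repeats=10, random_state=0)

# Indices of features sorted from most to least important.
ranking = result.importances_mean.argsort()[::-1]
for idx in ranking[:5]:
    print(f"{data.feature_names[idx]:25s} {result.importances_mean[idx]:.4f}")
```

Computing importance on held-out data rather than on the training set avoids rewarding features the model merely memorized.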

Model Deployment

Making the model available for real-world use involves several key steps:

- Serving the model as an API or embedding it in applications, often using tools like Docker, FastAPI, AWS, Azure, or GCP.

- Monitoring model performance over time to detect drift or degradation.

- Setting up automated retraining pipelines using newly collected data to maintain model accuracy in production.
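The sketch below illustrates serving and monitoring under simplifying assumptions:

- The model is persisted with `joblib` and reloaded, as a server process would do.
- `predict_one` is a hypothetical request handler. A web framework such as FastAPI could expose it as an endpoint.
- Drift is checked one feature at a time with a two-sample Kolmogorov-Smirnov test from SciPy.

The 0.01 significance level and the simulated shift are illustrative choices. Real monitoring would also track prediction quality once true labels arrive, and a drift alert would typically trigger the retraining pipeline.

```python
import os
import tempfile

import joblib
import numpy as np
from scipy.stats import ks_2samp
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)).fit(X, y)

# 1. Persist the trained model as an artifact, then reload it as a server would.
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "model.joblib")
    joblib.dump(model, path)
    served_model = joblib.load(path)

def predict_one(features):
    """Hypothetical request handler: one feature vector in, a JSON-ready dict out."""
    row = np.asarray(features, dtype=float).reshape(1, -1)
    return {
        "prediction": int(served_model.predict(row)[0]),
        "probability": float(served_model.predict_proba(row)[0, 1]),
    }

# 2. Monitoring: flag a feature whose live distribution differs from training.
def feature_drifted(reference, live, alpha=0.01):
    """Two-sample Kolmogorov-Smirnov test; True means the distributions differ."""
    return bool(ks_2samp(reference, live).pvalue < alpha)

reference = X[:, 0]                          # training-time values of one feature
shifted = reference + 3 * reference.std()    # simulated drifted production data

print(predict_one(X[0]))
print("drift detected:", feature_drifted(reference, shifted))
```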

Stay Updated & Engage with the Community

The machine learning landscape evolves rapidly, with new techniques, tools, and research appearing constantly, so continuous learning is vital. Ways to keep up include:

- Reading recent academic and industry papers.

- Participating in communities such as Kaggle and Stack Overflow.

- Joining webinars, workshops, and challenges for hands-on experience and networking with peers.

Followed systematically, these steps cover the entire lifecycle of a machine learning project. The lifecycle runs from framing the problem through data collection, model development, and validation to deployment and ongoing maintenance.

This structured approach helps make machine learning applications efficient, well-organized, accountable, and capable of meaningful real-world impact.

Comparison of Beginner vs. Intermediate vs. Expert Practical Steps

The following comparison shows how practical machine learning steps differ across skill levels (beginners, intermediates, and experts) at each key stage of the workflow.

| Step | Beginners | Intermediates | Experts |
|---|---|---|---|
| Problem Definition | Simple, single task | Complex multi-label tasks | Multi-objective, business-aligned |
| Data Handling | Pre-cleaned datasets | Real-world messy data | Data lake and big data processing |
| Model Selection | Simple models (e.g., linear, logistic regression, decision trees) | Ensemble and advanced models (random forests, gradient boosting) | Custom architectures, transfer learning, deep learning variants |
| Tuning | Default parameters | Manual hyperparameter tuning, cross-validation | Automated tuning (Bayesian optimization, AutoML frameworks) |
| Evaluation | Train/test split | Cross-validation | Robust statistical analysis, advanced validation techniques |
| Deployment | Local scripts or notebooks | Basic web APIs for serving models | Scalable deployment with CI/CD pipelines, full MLOps integration |
| Explainability | Basic feature importance plots | LIME/SHAP for local/global explanations | Regulatory-grade Explainable AI (XAI) methods, interpretable model governance |

Explanation:

- Problem Definition: Beginners focus on simple, clear tasks to get started without added complexity. Intermediates handle tasks with multiple labels or facets. Experts align ML goals with multiple business objectives and the organization’s strategic priorities.

- Data Handling: Beginners typically use clean, pre-processed datasets (like curated educational datasets), intermediates work with messy, real-world data requiring significant cleaning and feature engineering, and experts operate on large-scale data lakes, integrating big data technologies to handle volume, velocity, and variety of data.

- Model Selection: Beginners start with straightforward algorithms good for learning and interpretability. Intermediates explore ensemble models for better accuracy. Experts develop or adapt custom deep learning models, employ transfer learning, or design architectures for specific domains.

- Tuning: Beginners rarely tune beyond the defaults. Intermediates tune manually and use cross-validation to improve performance. Experts use automated hyperparameter optimization, such as Bayesian methods or AutoML, to save time and improve results.

- Evaluation: Beginners rely on simple train/test splits and basic metrics, intermediates utilize robust cross-validation schemes to better assess generalization, and experts perform in-depth statistical analysis to validate models rigorously.

- Deployment: Beginners run scripts locally or in notebooks. Intermediates set up simple APIs to integrate models with applications. Experts establish scalable continuous integration and deployment pipelines and full MLOps practices to automate model delivery, monitoring, and updating.

- Explainability: Beginners typically review feature importance for intuition, intermediates use LIME or SHAP for detailed, interpretable explanations, and experts adhere to strict regulatory frameworks with advanced XAI methods to ensure transparency, fairness, and compliance.

This comparison shows how practical machine learning steps evolve with expertise. Workflows become more complex, larger in scale, more rigorous, and more automated over time.

It also serves as a guide for learners and professionals who want to scale their skills and refine their approach to match their current level of knowledge and experience.

Current Trends and Developments in Machine Learning (2025)

In 2025, machine learning (ML) continues to evolve rapidly. Technological innovation and growing demand for intelligent, scalable, and efficient solutions across industries both drive this change, transforming how data is processed, analyzed, and used.

Here are the most important trends shaping the machine learning landscape this year:

Automated Machine Learning (AutoML)

- Platforms like Google AutoML and H2O.ai automate model selection, hyperparameter tuning, and feature engineering.

- AutoML democratizes machine learning, making it accessible to non-experts while accelerating workflows for seasoned practitioners.

Generative AI & Foundation Models

- Large multimodal foundation models (e.g., GPT-4.5, Gemini) generate text, images, audio, and video with impressive quality.

- Custom fine-tuning enables businesses to tailor these models for domain-specific tasks such as legal or medical applications.

- Low-code/no-code AI tools ease adoption among non-technical users.

Explainable AI (XAI)

- Transparency is increasingly critical, especially in regulated, high-stakes industries like healthcare and finance.

- Techniques like SHAP, LIME, and feature importance visualizations are widely adopted to ensure model interpretability and regulatory compliance.

Edge AI & TinyML

- Machine Learning models are increasingly deployed directly on devices (smartphones, sensors, wearables) to reduce latency and preserve privacy.

- These edge models are optimized for energy efficiency and real-time insights, enabling applications such as health monitoring and autonomous systems.

MLOps and Continuous Deployment

- Full ML lifecycle management, covering versioning, scalable deployment, continuous monitoring, and automated retraining, has become standard for mature teams.

- CI/CD pipelines and robust monitoring ensure model performance and reliability over time, supporting enterprise-scale Machine Learning production.

Additional notable trends include:

- Machine Unlearning / Digital Data Forgetting: Techniques for removing the influence of specific training data from a trained model, for example to honor privacy and data-deletion requests.

- Interoperability between Neural Network Frameworks: Standards like ONNX facilitate smoother collaboration and model reuse across platforms.

- Integration with IoT, 5G, and Blockchain: Enhancing secure, real-time, and scalable Machine Learning applications across industries.

- Advances in Quantum Computing and AR: Emerging technologies that may eventually accelerate deep learning and enable more personalized experiences.

These advances reflect a strong emphasis on democratizing artificial intelligence and making it accessible to more users and industries. They also aim to improve efficiency, transparency, and scalability in machine learning solutions.

They also embed machine learning across a wide range of devices and industry sectors. As a result, enterprises increasingly rely on machine learning to drive innovation, optimize operational workflows, and deliver smarter, more personalized user experiences.

Case Study: Practical Steps for Implementing Machine Learning

This case study follows an e-commerce team building a machine learning (ML) model to predict customer churn. Its approach aligns well with real-world practice and with research-supported methods for churn prediction.

Below is a step-by-step breakdown of the approach, with notes on how each step reflects established practice in machine learning and customer behavior analytics:

- Define Objective: Reducing churn by a specific target (e.g., 10% in the next quarter) is a great measurable business goal. Clarity here is crucial since objectives guide metric selection and model design. Studies emphasize aligning churn predictions with actionable retention goals to maximize business value rather than just model accuracy.

- Data Collection: Gathering diverse data such as historical purchases, site activity, and customer support logs is consistent with best practices. Comprehensive and multi-source data allow the model to better capture customer behavior patterns predictive of churn.

- Preparation: Cleaning duplicates, filling missing values, and feature engineering (e.g., login frequency, average purchase value) strongly influence model quality. Feature importance analyses in churn prediction commonly highlight engagement metrics and purchase history as top predictors.

- Model Selection: Starting with logistic regression for this binary classification problem (churn or not) follows the recommended baseline approach. Logistic regression offers interpretability and quick benchmarking. The team also planned to try more advanced models, random forests and XGBoost. Empirically, these capture complex nonlinear relationships and improve predictive accuracy on e-commerce churn data.

- Training & Tuning: Grid search is an industry-standard method for hyperparameter tuning, and research confirms that tuning random forests and XGBoost substantially boosts their effectiveness. Churn data also tends to be imbalanced, with few churners among many non-churners. The case study does not mention it, but techniques like SMOTE (Synthetic Minority Over-sampling Technique) are widely recommended for improving recall, that is, catching more actual churners.

- Evaluation: Focusing on recall to reduce false negatives is a sound business approach since missing likely churners leads to lost retention opportunities. In churn prediction literature, recall and F1-score are preferred evaluation metrics over raw accuracy, especially with imbalanced datasets.

- Deployment: Serving the model via API to integrate with email marketing for retention campaign triggering reflects a real-world application, where Machine Learning outputs drive operational decisions. Using APIs ensures flexibility and scalability in connecting predictions to customer engagement platforms.

- Monitoring: Tracking live model performance and updating with new data aligns with best practices in MLOps (Machine Learning Operations), ensuring that the model adapts over time and maintains accuracy despite changing customer behaviors or market dynamics.

- Reflection & Impact: Achieving a 12% churn reduction demonstrates the tangible business value of a well-executed ML churn model. Studies highlight that data-driven retention strategies informed by Machine Learning predictions significantly enhance customer loyalty and profitability compared to intuition-driven efforts.
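The core of the case-study workflow can be sketched in a few lines. The data below is synthetic. The feature names (`login_frequency`, `avg_purchase_value`, `support_tickets`) echo the case study, but the values and the churn relationship are assumptions made up for illustration, so the scores say nothing about the case study's real results. Random forest stands in for the more advanced models. XGBoost would come from the separate `xgboost` package, and hyperparameter tuning is omitted for brevity.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import f1_score, recall_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
n = 2000

# Synthetic customers; feature names echo the case study, the data is made up.
df = pd.DataFrame({
    "login_frequency": rng.poisson(8, n),
    "avg_purchase_value": rng.gamma(2.0, 30.0, n),
    "support_tickets": rng.poisson(1, n),
})

# Assumed ground truth: fewer logins and more support tickets raise churn odds.
logit = (-2.0
         - 0.35 * (df["login_frequency"] - 8)
         + 0.6 * df["support_tickets"]
         - 0.01 * (df["avg_purchase_value"] - 60)).to_numpy()
p_churn = 1 / (1 + np.exp(-logit))
df["churned"] = (rng.random(n) < p_churn).astype(int)

X = df.drop(columns="churned")
y = df["churned"]
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0)

# class_weight="balanced" counteracts the churn/non-churn imbalance.
models = {
    "logistic regression (baseline)": make_pipeline(
        StandardScaler(), LogisticRegression(class_weight="balanced", max_iter=1000)),
    "random forest": RandomForestClassifier(
        n_estimators=300, min_samples_leaf=5, class_weight="balanced", random_state=0),
}

# Recall is the primary metric: missed churners are lost retention opportunities.
results = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    pred = model.predict(X_test)
    results[name] = {"recall": recall_score(y_test, pred), "f1": f1_score(y_test, pred)}
    print(f"{name}: recall={results[name]['recall']:.3f}, F1={results[name]['f1']:.3f}")
```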

Additional Insights & Recommendations:

- Handling Class Imbalance: Churn datasets are usually imbalanced, so manage the imbalance deliberately. Resampling techniques, such as oversampling the minority class or undersampling the majority class, produce a more balanced training set. Lowering the classification threshold can raise recall so the model catches more actual churners. Adjust it carefully to avoid a sharp rise in false positives.

- Explainability: Tools such as SHAP or LIME help pinpoint the features that most influence churn, so businesses can design targeted interventions. This transparency also builds stakeholder trust, because people can see how the model reaches its predictions.

- Ensemble & Hybrid Models: Ensemble methods such as random forests and boosting, and hybrid models that combine ML algorithms with recommendation systems, can further improve predictive performance by drawing on the strengths of multiple models.

- Continuous Learning: Regularly retraining the model on fresh data and adding feedback loops from ongoing marketing campaigns can produce sustained, long-term improvements. Retention strategies can then adapt as market conditions and customer behaviors change.
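The class-imbalance recommendation can be sketched with plain scikit-learn. The sketch first applies random oversampling of the minority class via `sklearn.utils.resample`. This is a simpler swap-in for SMOTE, which lives in the third-party `imbalanced-learn` package. It then sweeps the threshold on predicted probabilities. The data comes from `make_classification` with an assumed 90/10 class split. Resampling is applied to the training set only, so the test set keeps its real class ratio.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import precision_score, recall_score
from sklearn.model_selection import train_test_split
from sklearn.utils import resample

# Synthetic imbalanced data: roughly 10% positives (e.g., churners).
X, y = make_classification(n_samples=3000, n_features=8, weights=[0.9, 0.1],
                           random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0)

# Random oversampling: draw minority rows with replacement until both classes match.
minority = y_train == 1
X_min_up, y_min_up = resample(
    X_train[minority], y_train[minority],
    replace=True, n_samples=int((~minority).sum()), random_state=0)
X_bal = np.vstack([X_train[~minority], X_min_up])
y_bal = np.concatenate([y_train[~minority], y_min_up])

model = LogisticRegression(max_iter=1000).fit(X_bal, y_bal)
proba = model.predict_proba(X_test)[:, 1]

# Lowering the threshold flags more cases: recall rises, precision usually falls.
recalls = []
for threshold in (0.7, 0.5, 0.3):
    pred = (proba >= threshold).astype(int)
    recalls.append(recall_score(y_test, pred))
    print(f"threshold={threshold}: recall={recalls[-1]:.3f}, "
          f"precision={precision_score(y_test, pred, zero_division=0):.3f}")
```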

FAQs

Do I need advanced math to start machine learning?

While advanced math (like linear algebra, calculus, and probability) deepens your understanding of Machine Learning algorithms, beginners can start effectively with practical projects and gradually learn foundational math concepts as needed. Many learners begin by focusing on coding and applying existing ML libraries rather than deriving algorithms from scratch.

Which programming language should I learn?

Python is the de facto standard language for machine learning due to its simplicity and the vast ecosystem of Machine Learning libraries (Scikit-learn, TensorFlow, PyTorch). R is also popular, especially for statistical modeling and visualization. Starting with Python is highly recommended for beginners, as it allows easy experimentation and access to community resources.

How do I choose the right model for my data?

Start with simple, interpretable models such as linear or logistic regression and decision trees. Use cross-validation to compare performance among models and let your problem type (classification, regression, clustering) and evaluation metrics (accuracy, recall, F1-score) guide model selection. As you grow, explore more complex models like ensemble methods or deep learning tailored to your dataset’s size and complexity.
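Comparing candidate models with cross-validation can look like the sketch below. Every model is scored on the same stratified folds with the same metric. F1 is an assumption here; replace it with whatever metric fits your problem. Because the folds are shared, differences reflect the models rather than lucky splits. The bundled breast cancer dataset is only a placeholder.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

# Same shuffled, stratified folds for every candidate so the comparison is fair.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

candidates = {
    "logistic regression": make_pipeline(StandardScaler(),
                                         LogisticRegression(max_iter=1000)),
    "decision tree": DecisionTreeClassifier(max_depth=4, random_state=0),
    "random forest": RandomForestClassifier(n_estimators=200, random_state=0),
}

results = {}
for name, model in candidates.items():
    scores = cross_val_score(model, X, y, cv=cv, scoring="f1")
    results[name] = (scores.mean(), scores.std())
    print(f"{name:20s} F1 = {scores.mean():.3f} +/- {scores.std():.3f}")
```

If two models score within about one standard deviation of each other, the simpler, more interpretable one is usually the safer choice.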

What are the top machine learning trends in 2025?

Key machine learning trends to watch in 2025 include:

- Automated Machine Learning (AutoML) platforms that automate model selection and parameter tuning

- Generative AI and large foundation models that create text, images, and more

- Explainable AI (XAI) for transparency in high-stakes applications

- Edge AI and TinyML, deploying models on devices for real-time, privacy-sensitive tasks

- Mature MLOps practices for continuous deployment, monitoring, and retraining of models

How do I keep my Machine Learning skills up to date?

Stay engaged with the machine learning community through platforms like Kaggle and Stack Overflow. Read new preprints on arXiv, follow leading conferences (NeurIPS, ICML), participate in webinars and workshops, and work on diverse, real projects. Continuously learning new tools, techniques, and theoretical advances helps you adapt to this rapidly evolving field.

These answers combine practical entry points for beginners with pointers toward continued growth and advanced trends. Together, they make machine learning accessible to newcomers while supporting deeper development.

In Conclusion

Practical machine learning steps give anyone, regardless of current skill level, a clear path to meaningful real-world expertise in this dynamic field. A strong foundation, consistent hands-on practice, and agility in the face of technological change are the keys to lasting success and advancement.

- For beginners, the critical action is to immerse yourself in straightforward, practical projects. Rather than being held back by abstract theory, focus on experimenting, coding, and iteratively improving simple models. This experiential approach quickly builds confidence, provides tangible wins, and lays the groundwork for deeper conceptual learning.

- Intermediate practitioners are encouraged to push boundaries by grappling with complex, messy real-world datasets, experimenting with advanced algorithms, and automating parts of their workflow. This progression ensures a robust skill set that bridges the gap between foundational understanding and professional Machine Learning practice. It is through continual exposure to real business or research challenges and persistent skill refinement that your knowledge matures and your models become truly impactful.

- Experts play a pivotal role in shaping and scaling ML adoption. Embracing next-generation paradigms such as Automated Machine Learning (AutoML) and generative AI enhances both efficiency and innovation. Seasoned professionals excel by optimizing deployment pipelines for scalability (MLOps) and ensuring models are robust and reliable in production. They also foster a culture of shared best practices, continuous learning, and peer mentorship within their teams and organizations.

Machine learning isn’t a destination, but a continuous, collaborative journey. Technology evolves, data grows, and new opportunities for impact arise every day. Whether you’re taking your very first step or refining enterprise-scale systems, the most important habits are to start now, experiment boldly, learn from every iteration, and remain open to new techniques and perspectives. This approach ensures you not only keep up with, but thrive amidst, the fast-changing landscape of modern data science.
